Latency Considerations

This section describes a practical approach to network latency modeling that blends deterministic, physics-based latency (distance, propagation medium) with probabilistic or rule-based latency (traffic, routing, queuing, and contention effects).

Latency in network transmission is the time it takes for data to travel between two points in a network. It is influenced by physical distance, media type, node/router processing, queuing, and traffic load. Beyond distance and medium, latency can also spike because of congestion, device processing constraints, routing inefficiencies, protocol overhead, and link aggregation; a model can capture some of these effects with rules, such as adding a fixed latency per additional route or per throughput level.

Factors Influencing Latency

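· Physical Distance: Signal propagation delay is proportional to the distance traveled. For fiber, a typical planning figure is about 1 ms per 60-100 miles of route; the physical floor is about 5 µs per km (roughly 1 ms per 124 miles one-way), and real figures are higher because paths are indirect and equipment adds delay.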

· Transmission Medium: Latency differs across fiber, cable, DSL, wireless, and satellite links due to their different physical and protocol properties.

· Network Devices: Routers and switches introduce processing delays. Aggregating multiple high-capacity links or running complex protocols can noticeably increase node latency, especially when internal queuing and scheduling algorithms are triggered. A model might capture this with a rule such as “add 10 ms for every three inbound 1 Gbps+ links.”

· Traffic Load & Congestion: Increased concurrent traffic creates queuing and can compound processing delays, magnifying overall latency.

· Jitter and Stability: Variation in delay from packet to packet (jitter) can degrade latency-sensitive services, especially interactive and real-time applications.

· Routing & Topology: Path selection, number of hops, and routing protocol behavior impact the cumulative delay.

Primary Components of Latency

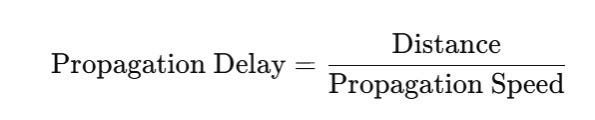

Propagation Delay

Determined by the distance traveled and the signal propagation speed in the physical medium.

· Fiber: ~200,000 km/s (≈ 2/3 speed of light in vacuum)

· Copper: ~160,000 km/s

· Free-space (RF/Optical): ~300,000 km/s
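As a minimal sketch, assuming the approximate speeds listed above and straight-line distances, one-way propagation delay is simply distance divided by signal speed. The dictionary keys and the function name `propagation_delay_ms` are illustrative, not taken from any library.

```python
# Approximate signal propagation speeds from the list above, in km/s.
PROPAGATION_SPEED_KM_S = {
    "fiber": 200_000.0,
    "copper": 160_000.0,
    "free_space": 300_000.0,
}


def propagation_delay_ms(distance_km: float, medium: str) -> float:
    """One-way propagation delay in milliseconds: distance / speed."""
    return distance_km * 1000.0 / PROPAGATION_SPEED_KM_S[medium]


for medium in PROPAGATION_SPEED_KM_S:
    print(f"{medium:>10}: {propagation_delay_ms(1000.0, medium):.2f} ms per 1,000 km")
```

Round-trip figures double these values, and real routes add distance because cables rarely follow a straight line.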

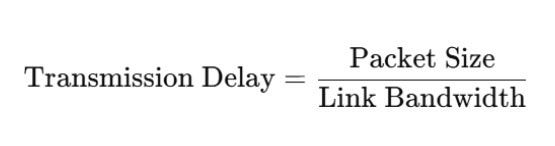

Transmission Delay

Time required to push all packet bits onto the wire.

For large packets on low-bandwidth links (e.g., HF radio), this can dominate.
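Transmission (serialization) delay is packet size in bits divided by link rate. The sketch below assumes a 1,500-byte packet and compares a 1 Gbps link with a hypothetical 9.6 kbps HF link; the 9.6 kbps rate is an illustrative assumption, since HF data rates vary widely.

```python
def transmission_delay_ms(packet_bytes: int, link_bps: float) -> float:
    """Serialization delay in ms: packet size in bits divided by link rate."""
    return packet_bytes * 8 * 1000.0 / link_bps


PACKET_BYTES = 1500  # assumed packet size
for label, bps in (("1 Gbps", 1e9), ("9.6 kbps HF (assumed)", 9600.0)):
    print(f"{label}: {transmission_delay_ms(PACKET_BYTES, bps):.3f} ms")
```

The same 1,500-byte packet takes about 0.012 ms to serialize at 1 Gbps but 1,250 ms at 9.6 kbps, which is why transmission delay can dominate on narrowband links.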

Processing Delay

Time spent examining packet headers, routing, and executing network stack operations. Influenced by router CPU performance, queuing policy, and routing complexity.

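Queuing Delay

The most variable component, dependent on traffic load and congestion. Queuing delay grows non-linearly as link utilization approaches saturation: in a simple M/M/1 queue, mean delay scales with 1/(1 - ρ), where ρ is link utilization, so it rises sharply as ρ approaches 1.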
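To illustrate how sharply queuing grows near saturation, the sketch below uses the textbook M/M/1 model (Poisson arrivals, exponential service times, a single server). This is a swapped-in idealization rather than a model the text prescribes; real router queues with bursty traffic and finite buffers behave differently. The 10,000 packets/s service rate is hypothetical.

```python
import math


def mm1_queueing_delay_ms(arrival_pps: float, service_pps: float) -> float:
    """Mean wait in queue (excluding service time) for an M/M/1 queue, in ms.

    Wq = rho / (mu - lambda), with rho = lambda / mu. Valid only for rho < 1.
    """
    if arrival_pps >= service_pps:
        return math.inf  # unstable: the queue grows without bound
    rho = arrival_pps / service_pps
    return rho / (service_pps - arrival_pps) * 1000.0


SERVICE_PPS = 10_000.0  # hypothetical link serving 10k packets/s
for utilization in (0.5, 0.8, 0.9, 0.95, 0.99):
    wait = mm1_queueing_delay_ms(utilization * SERVICE_PPS, SERVICE_PPS)
    print(f"utilization {utilization:.2f}: {wait:.3f} ms")
```

Under these assumptions, mean queue wait rises from 0.1 ms at 50% utilization to 0.9 ms at 90% and 9.9 ms at 99%.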

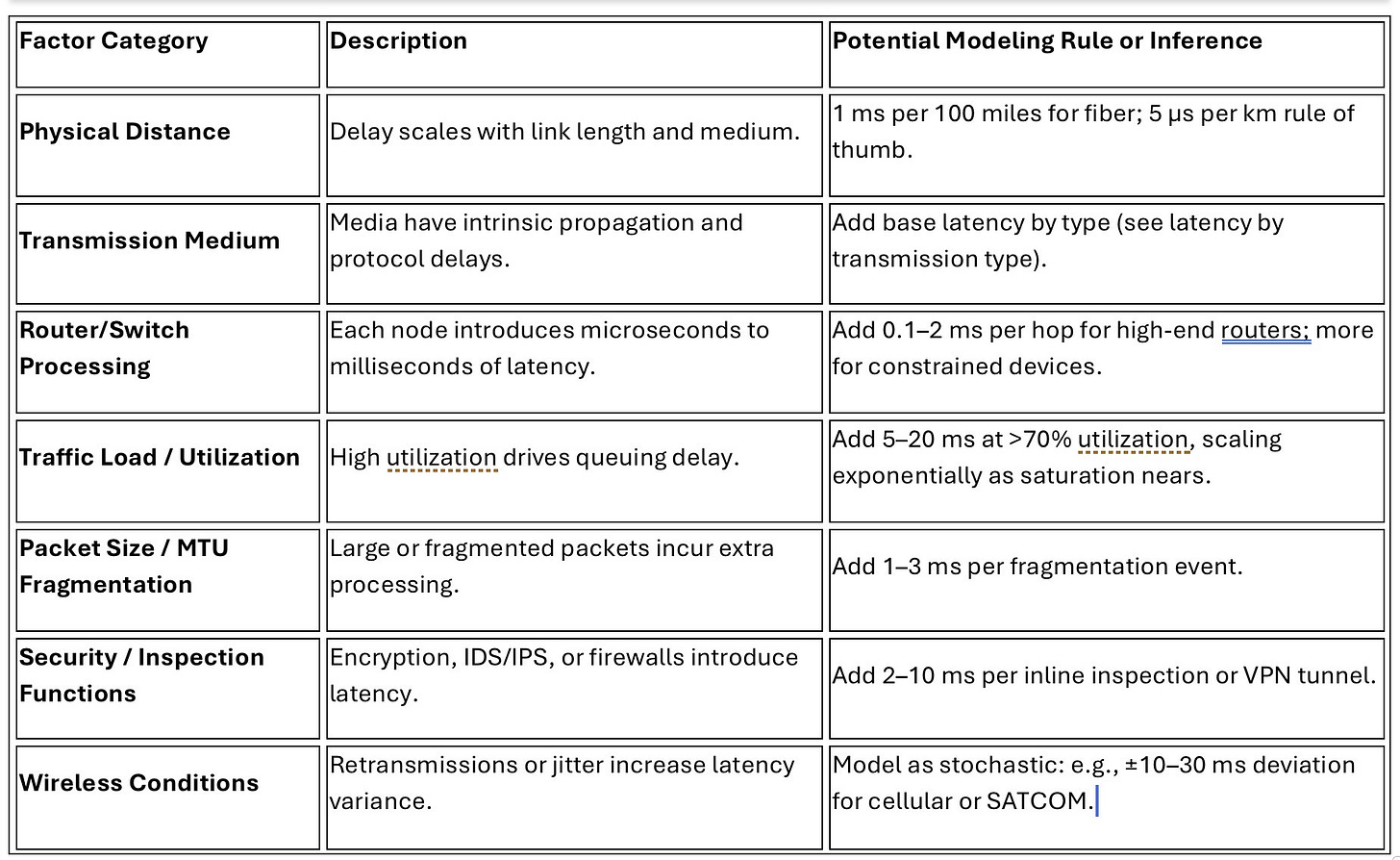

Latency Factors in a Network Model

A realistic network model must combine deterministic physics with empirical or rule-based behaviors. Below are categories to model latency more accurately:

You can define rule sets to approximate real-world effects. For example:

“If three or more inbound links >1 Gbps converge at a router with CPU <2 GHz, add 10 ms node latency due to queue contention and buffer processing.”

These heuristics become part of a “network behavior library” that can be tuned with observed data.
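One way to encode such heuristics is a small library of predicate/penalty pairs evaluated per node. The sketch below implements only the example rule above; the `Router` and `LatencyRule` types, the 0.5 ms base processing time, and the router name are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Router:
    name: str
    cpu_ghz: float
    inbound_link_gbps: List[float] = field(default_factory=list)


@dataclass
class LatencyRule:
    description: str
    applies: Callable[[Router], bool]
    penalty_ms: float


def high_capacity_contention(router: Router) -> bool:
    # Example rule: three or more inbound links >1 Gbps at a router with CPU <2 GHz.
    fast_links = sum(1 for gbps in router.inbound_link_gbps if gbps > 1.0)
    return fast_links >= 3 and router.cpu_ghz < 2.0


# Hypothetical "network behavior library": extend with further rules as data allows.
BEHAVIOR_LIBRARY = [
    LatencyRule("queue contention / buffer processing", high_capacity_contention, 10.0),
]


def node_latency_ms(router: Router, base_ms: float,
                    rules: List[LatencyRule] = BEHAVIOR_LIBRARY) -> float:
    """Base processing time plus the penalty of every rule that fires."""
    return base_ms + sum(rule.penalty_ms for rule in rules if rule.applies(router))


edge = Router("edge-1", cpu_ghz=1.8, inbound_link_gbps=[10.0, 10.0, 2.5])
print(node_latency_ms(edge, base_ms=0.5))
```

Keeping each rule as data (description, predicate, penalty) makes it straightforward to retune penalties or disable rules as observed measurements accumulate.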

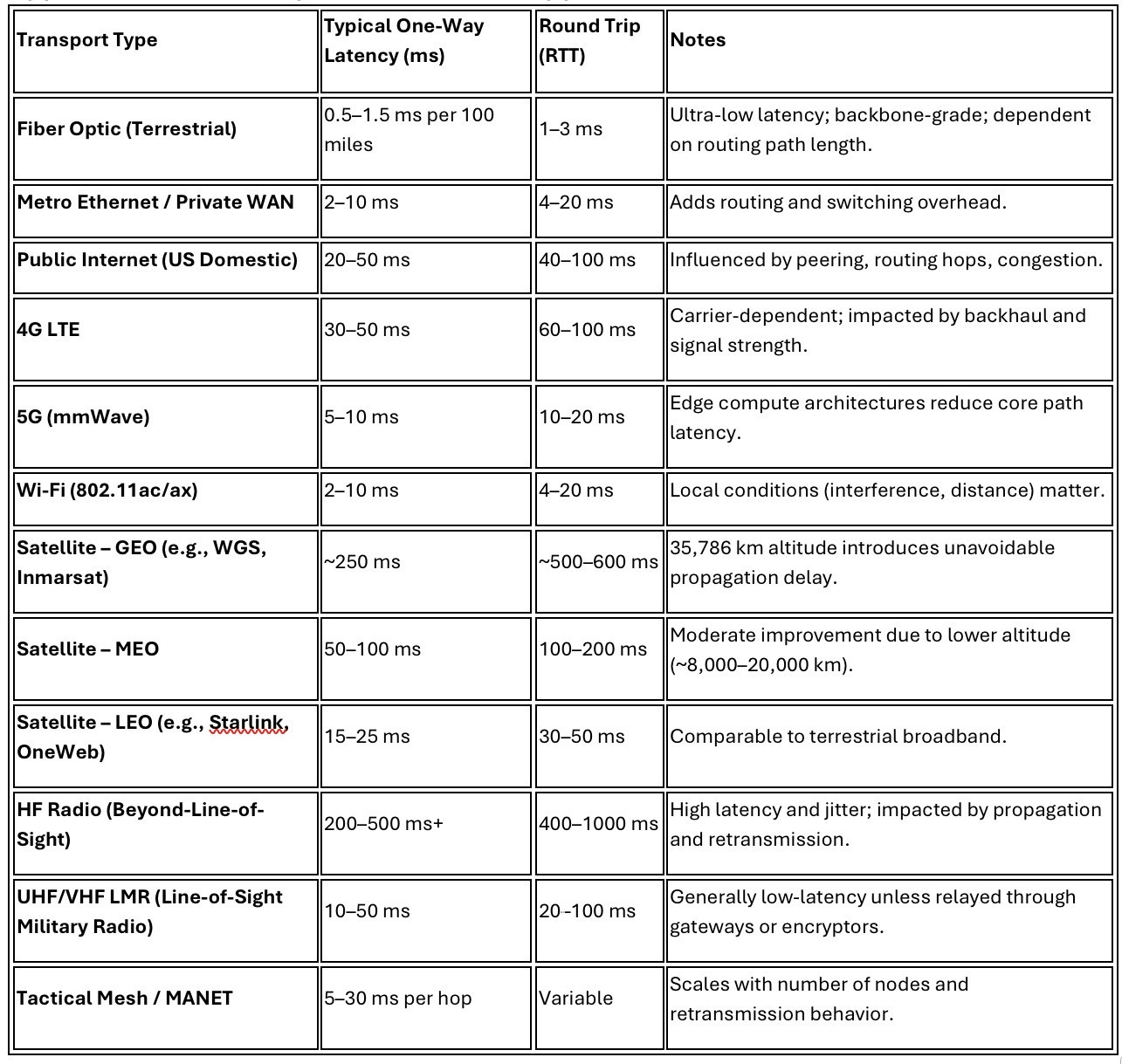

Typical Latencies by Transmission Type

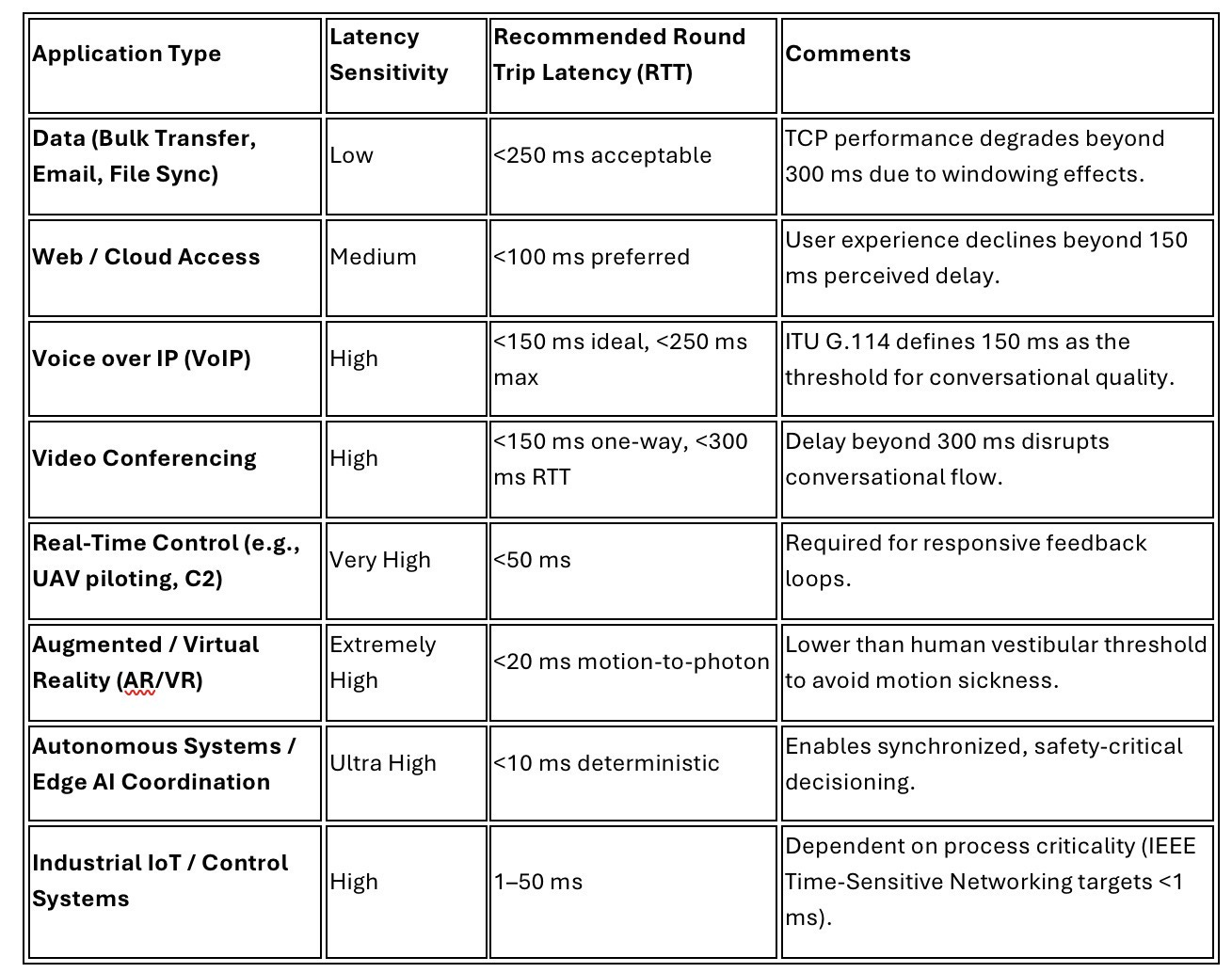

Application-Specific Latency Expectations

Implications for Modeling and DoD Systems

For mission networks (JADC2, C5ISR, or tactical edge), latency is not merely a performance measure; it defines operational feasibility. Command loops, sensor fusion, and fires coordination depend on deterministic, low-latency transport.

Modeling latency in such environments requires a multi-layered approach:

1. Base Physics Model: Derived from link types and geographic topology.

2. Behavioral Rules: Capture node processing, queuing, and policy effects.

3. Stochastic Variability: Reflects real-world volatility in wireless and contested environments.

4. Mission Overlay: Maps latency tolerance per mission thread or data type.

With these elements, you can simulate the “effective latency envelope” of a network under varying conditions and determine if mission objectives (e.g., <50 ms C2 decision cycle) can be achieved.
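The four layers can be combined in a Monte Carlo sketch that estimates the latency envelope for one path. All inputs are hypothetical: the path is 1,000 km of fiber plus a 300 km free-space hop, one 10 ms behavioral rule fires, and queuing/contention noise is exponentially distributed with an 8 ms mean. The exponential noise model and the thread name `c2_decision_cycle` are illustrative choices, not calibrated values.

```python
import random
import statistics

# Layer 1: propagation speeds in km per ms (fiber ~200,000 km/s, etc.).
SPEED_KM_PER_MS = {"fiber": 200.0, "copper": 160.0, "free_space": 300.0}


def base_physics_ms(segments):
    """Deterministic propagation delay for a list of (medium, km) segments."""
    return sum(km / SPEED_KM_PER_MS[medium] for medium, km in segments)


def simulate_envelope(segments, node_penalties_ms, mean_queue_ms,
                      trials=10_000, seed=1):
    """Monte Carlo estimate of the one-way latency envelope.

    Layer 2: node_penalties_ms are fixed rule-based penalties (e.g. +10 ms).
    Layer 3: queuing/contention noise is drawn from an exponential
    distribution with the given mean (an assumption, not measured data).
    """
    rng = random.Random(seed)
    fixed = base_physics_ms(segments) + sum(node_penalties_ms)
    samples = [fixed + rng.expovariate(1.0 / mean_queue_ms) for _ in range(trials)]
    cuts = statistics.quantiles(samples, n=100)  # 99 percentile cut points
    return {"min": min(samples), "p50": cuts[49], "p95": cuts[94], "p99": cuts[98]}


# Layer 4: mission overlay (hypothetical thread name; 50 ms from the example above).
MISSION_THRESHOLDS_MS = {"c2_decision_cycle": 50.0}


def meets_mission(envelope, thread, percentile="p95"):
    return envelope[percentile] <= MISSION_THRESHOLDS_MS[thread]


env = simulate_envelope(
    segments=[("fiber", 1000.0), ("free_space", 300.0)],  # 5 ms + 1 ms
    node_penalties_ms=[10.0],                               # one rule fired
    mean_queue_ms=8.0,
)
print(env, meets_mission(env, "c2_decision_cycle"))
```

With these inputs the fixed part is 16 ms, and the exponential tail puts the analytical p95 near 16 + 8·ln(20) ≈ 40 ms (inside the 50 ms budget) but the p99 near 16 + 8·ln(100) ≈ 53 ms (outside it). This is why the envelope should be judged at a stated percentile rather than at the mean.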

Recommendations for Modeling Latency

· Rules for modeling node and link latency should combine fixed media-based physical delays with dynamic components (traffic, routing, aggregation).

· Simple additive rules (e.g., +10 ms per 3 aggregated high-capacity links) can be viable when they are grounded in measured or observed performance, but they must be calibrated for congestion and topology complexity.

· For mission-critical and military/enterprise networks, continuous monitoring and adaptability are advisable, as real-world latency is non-linear and highly context-dependent.
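Calibrating an additive rule against measurements can be as simple as a least-squares fit. The sketch below assumes the rule form "k ms per group of three high-capacity links" and fits k through the origin; the function name `fit_penalty_per_group` is hypothetical, and the sample data are made-up illustrative values, not observations.

```python
def fit_penalty_per_group(link_counts, excess_latency_ms, group_size=3):
    """Least-squares fit (through the origin) of ms added per group of links.

    Model: excess = k * floor(link_count / group_size); returns k.
    """
    groups = [n // group_size for n in link_counts]
    num = sum(g * y for g, y in zip(groups, excess_latency_ms))
    den = sum(g * g for g in groups)
    if den == 0:
        raise ValueError("no samples with a full group of links")
    return num / den


# Made-up samples: aggregated link count vs. latency above the physics baseline.
counts = [3, 6, 9, 4, 7]
excess = [9.0, 21.0, 30.0, 11.0, 19.0]
print(fit_penalty_per_group(counts, excess))
```

With these made-up samples the fit returns 10.0 ms per group. In practice, residuals that grow with load or hop count are a sign the rule needs congestion or topology terms.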

Properly understanding and modeling latency is essential for architecting high-performance networks, designing resilient communications, and ensuring user satisfaction for demanding applications such as immersive AR or real-time voice/video.

Summary

Latency is not a single metric but a system outcome shaped by physical, logical, and behavioral variables. In modeling it:

· Start from known constants (propagation physics).

· Add rules for inferred processing and queuing delays.

· Introduce stochastic or policy-based adjustments for traffic, routing, and security.

· Map outcomes to application-specific thresholds.

This approach creates a credible analytical foundation for network performance engineering and can be extended into AI-based latency prediction models using telemetry from observability tools or network digital twins.